YOUR BRAIN, THE REMOTE: AI'S LEAP IN PROSTHETIC TECHNOLOGY

By Pooja Kumar and Nithyaanagha M

A prosthetic is the highest act of empathy. The desire to feel and appear normal is understood and accommodated when a prosthetic is designed and fitted. Losing a limb should never limit people's willpower; their tremendous drive to keep moving has produced magnificent innovations like prosthetics, which empower them to do more and overcome physical challenges.

As the use cases of intelligent systems keep expanding, from creative tasks like writing a movie script to analytical ones like predicting the risk of stroke in patients, these complex and ever-evolving programs have also been introduced to the world of prosthetics. In this article, we discuss the involvement of AI in prosthetics and its ability to help translate thoughts directly into physical action, a concept that has captivated science fiction for decades. Now, we're seeing it become a reality. The potential to restore not just movement but also a sense of wholeness to individuals who have lost limbs is profoundly moving. The fact that AI is the catalyst that allows this to happen makes it that much more interesting.

How do you begin moving your feet forward? Your brain recognizes the need for movement, processes that information, and sends electrical impulses racing down your nervous system.

However, if you ask a machine, it'll begin by calculating the distance it must cover and the speed it must move at. It thinks because we gave it that power. It learns from what we teach it, so logically, we have to teach it everything, unless it has self-learning capabilities. THAT trait is incredibly valuable, and it is taken full advantage of in this instance.

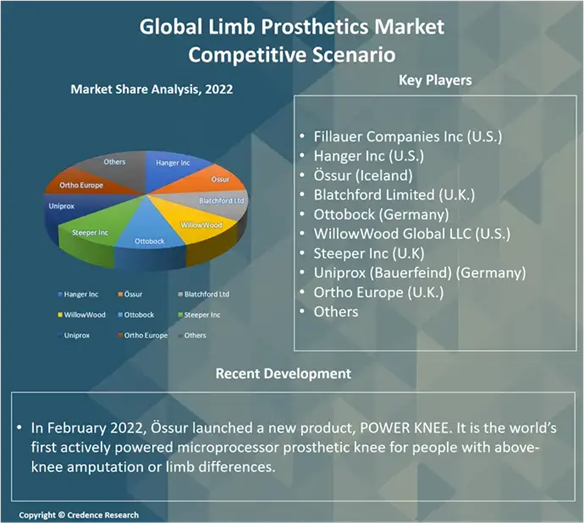

A review paper on the subject highlights Chas. A. Blatchford & Sons of Great Britain as the company that "introduced the first commercially available microprocessor-controlled prosthetic knee, called the Intelligent Prosthesis, in 1993". The paper states that "It improved the natural look and feel of walking with a prosthetic". Several upgraded versions came soon after: the Intelligent Prosthesis Plus, released in 1995, and the Adaptive Prosthesis, in 1998. This later model included hydraulic and pneumatic controls along with a microprocessor. "Computerized knees are a newer type of prosthetic technology. They learn the user's walking characteristics and have sensors to measure timing, force, and swing." [1]

Some explorations in computerized prostheses:

Myoelectric prosthesis

Retinal Prosthesis

Retinal prostheses, or bionic eyes, convert incoming light into electrical signals and transmit them along the optic nerve to the brain. The brain reads these signals to "create" what we understand as images. [4]
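To make the conversion step concrete, the sketch below shows one simplified way an image could be reduced to per-electrode stimulation levels: it averages pixel brightness over blocks, one block per electrode, and scales the result to a small range of levels. The function name `to_electrode_grid`, the grid size, and the `max_level` of 15 are illustrative assumptions, not details of any real device.

```python
def to_electrode_grid(image, rows, cols, max_level=15):
    """Reduce a grayscale image (lists of 0-255 values) to a rows x cols grid.

    Each grid cell stands for one hypothetical electrode. Its value is the
    mean brightness of the matching image block, scaled to 0..max_level.
    """
    height, width = len(image), len(image[0])
    if rows > height or cols > width:
        raise ValueError("grid must not be larger than the image")

    grid = []
    for r in range(rows):
        # Pixel rows covered by this electrode row.
        y0, y1 = r * height // rows, (r + 1) * height // rows
        grid_row = []
        for c in range(cols):
            # Pixel columns covered by this electrode column.
            x0, x1 = c * width // cols, (c + 1) * width // cols
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            mean_brightness = sum(block) / len(block)
            grid_row.append(round(mean_brightness / 255 * max_level))
        grid.append(grid_row)
    return grid
```

A real device would involve far more than this, but the sketch captures the basic idea of turning a detailed image into a much coarser pattern of electrical stimulation.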

Retinal prosthetics aren't meant only for people with complete blindness or for those whom disease has left visually impaired, but also for people with conditions such as red-green colour blindness. Combining them with AI/ML may optimize visual processing and even help restore partial vision.

"Additionally, AI improves retinal prosthetics by combining neural networks with computer vision techniques to refine facial features, enhance environmental representation, and ensure safety. In hearing prosthetics, AI, machine learning, and neural networks enable devices to adapt to individual hearing needs and background noise environments." [1]

Ongoing Research:

- Advanced AI Algorithms: Researchers are continuously refining AI algorithms to improve the accuracy and speed of neural signal decoding. For example, researchers at Johns Hopkins University have been working on algorithms that can decode complex finger movements, allowing for more precise control.

- Improved Electrode Technology: Efforts are underway to develop more durable and biocompatible electrodes that can capture higher-resolution neural signals. Researchers at Brown University are working on flexible, mesh-like electrodes that can conform to the brain's surface.

- Sensory Feedback: Creating realistic sensory feedback is a major challenge. Researchers are exploring methods to stimulate the sensory cortex, allowing users to feel touch, pressure, and temperature. For example, researchers at the University of Pittsburgh have demonstrated that electrical stimulation of the sensory cortex can evoke sensations of touch in human participants.

- Non-Invasive BMIs: While invasive brain-machine interfaces (BMIs) offer higher signal quality, non-invasive methods, such as EEG (electroencephalography), are being improved to make the technology more accessible. Companies like Synchron are working on less invasive implants that can be placed through blood vessels.

Case Study

Decoding Thought - The Breakthrough of BMI Prosthetics

1. Ongoing research and applications

Researchers have built on the principle of the myoelectric prosthesis to develop sophisticated systems that detect electrical signals generated by the brain, interpret them, and translate them into commands for a robotic limb.

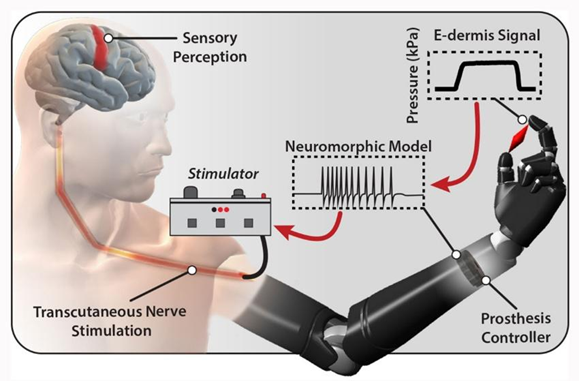

Electrodes are implanted in the brain (invasively) or placed on the scalp (non-invasively) to capture neural signals. These signals are then processed by AI-powered deep learning algorithms, which analyze complex neural patterns and identify the specific signals associated with various movements. The system translates the decoded neural signals into commands. These commands control the prosthetic limb's motors and actuators, enabling precise movement. Sensors on the prosthetic limb provide feedback to the user, allowing them to adjust the prosthetic's position and movement in real time, which makes control feel more natural and intuitive.
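The capture, decode, command, and feedback loop described above can be sketched in a few lines. This is a toy illustration under heavy assumptions, not how any real BMI is built: the signals are synthetic random numbers, the "decoder" is a simple nearest-centroid classifier standing in for the deep learning models mentioned above, and every name (`synth_window`, `calibrate`, `decode`, `control_step`, the `open`/`close`/`rest` intents) is hypothetical.

```python
import math
import random

# Hypothetical intents mapped to motor velocities (illustrative values only).
COMMANDS = {"open": 1.0, "close": -1.0, "rest": 0.0}

# Assumed per-channel signal amplitude for each intent, used only to fake data.
PROFILES = {"open": (1.0, 0.2), "close": (0.2, 1.0), "rest": (0.1, 0.1)}


def synth_window(label, rng, n_samples=200):
    """Generate a synthetic two-channel window standing in for recorded signals."""
    return [[rng.gauss(0.0, amp) for _ in range(n_samples)]
            for amp in PROFILES[label]]


def features(window):
    """Summarize each channel by its mean absolute amplitude."""
    return [sum(abs(s) for s in channel) / len(channel) for channel in window]


def calibrate(labelled_windows):
    """Average the feature vectors recorded for each intended movement."""
    sums, counts = {}, {}
    for label, window in labelled_windows:
        feats = features(window)
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, value in enumerate(feats):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def decode(window, centroids):
    """Return the intent whose calibrated centroid is closest to this window."""
    feats = features(window)
    return min(centroids, key=lambda label: math.dist(feats, centroids[label]))


def control_step(position, window, centroids, gain=0.1):
    """One control tick: decode intent, turn it into a motor command, and
    return the new joint position (clamped to 0..1) as the feedback value."""
    label = decode(window, centroids)
    velocity = COMMANDS[label]
    new_position = min(1.0, max(0.0, position + gain * velocity))
    return label, new_position
```

In use, one would first call `calibrate` on a set of labelled windows recorded while the user attempts each movement, then call `control_step` repeatedly on fresh windows, feeding the returned position back in on the next tick.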

For individuals with severe paralysis, BMIs offer more than just a way to communicate; they provide a voice where there was once silence. The ability to express thoughts, emotions, and needs through technology can restore a profound sense of connection and autonomy, bridging the gap between isolation and the world around them.

2. Limitations

One of the major challenges in neural signal processing, especially with non-invasive methods, is the presence of signal noise, making it difficult to interpret neural activity accurately. Invasive brain-machine interfaces (BMIs), despite offering better precision, require surgical implantation, which comes with inherent risks and limitations. Additionally, training AI algorithms to decode neural signals effectively demands extensive calibration and user adaptation, adding to the complexity of implementation. Another significant hurdle lies in replicating realistic sensory feedback, as creating a natural sense of touch and proprioception remains a major challenge. Furthermore, implanted devices can face long-term stability issues, potentially affecting their reliability and performance over time.
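As a small illustration of the noise problem, the sketch below smooths a noisy signal with a moving average, one of the simplest noise-reduction techniques. It is only a stand-in for the far more elaborate filtering real systems need, and the function names and the synthetic signal are assumptions made for this example.

```python
import random
import statistics


def moving_average(samples, width):
    """Smooth a signal by averaging each sample with up to (width - 1)
    of the samples that came before it."""
    smoothed = []
    running = 0.0
    for i, sample in enumerate(samples):
        running += sample
        if i >= width:
            # Drop the sample that just fell out of the averaging window.
            running -= samples[i - width]
        smoothed.append(running / min(i + 1, width))
    return smoothed


def noisy_constant(level, noise_sd, n, rng):
    """Synthetic test signal: a constant level plus Gaussian noise."""
    return [level + rng.gauss(0.0, noise_sd) for _ in range(n)]


if __name__ == "__main__":
    rng = random.Random(0)
    raw = noisy_constant(1.0, 0.5, 2000, rng)
    smooth = moving_average(raw, 25)
    print("raw spread:     ", statistics.pstdev(raw))
    print("smoothed spread:", statistics.pstdev(smooth[25:]))
```

The trade-off is delay: a wider window removes more noise, but the output responds more slowly to genuine changes in the signal, which matters when a limb has to be controlled in real time.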

3. Future Aspects/Plans:

1. Advancements in AI: Deep Learning and Signal Processing

Continued research in deep learning and other AI techniques will enhance signal processing and decoding accuracy, contributing considerably to the development of more sophisticated BMIs.

2. Minimally Invasive Techniques:

Developing less invasive or non-invasive BMI technologies will make these systems more accessible to a significantly wider range of users. Micro-engineered neuronal networks (MNNs), for instance, are playing a crucial role in advancing BMI technologies by providing a minimally disruptive interface with the brain.

3. Closed-Loop Systems:

Implementing closed-loop systems that provide real-time sensory feedback will improve the user's sense of embodiment and control over devices. This is evident in recent developments like the two-way adaptive brain-computer interface, which enhances communication efficiency by providing feedback directly to the brain.

4. Biocompatible Materials:

Research into new biocompatible materials will improve the longevity and safety of implanted devices. This is essential for reducing inflammation and long-term damage associated with traditional electrodes.

Conclusions

The ability to directly translate thoughts into physical action is a concept that has captivated science fiction for decades. Now, we're seeing it become a reality. The potential to restore not just movement but also a sense of wholeness to individuals who have lost limbs is profoundly moving. The fact that AI is the catalyst that allows this to happen makes it that much more interesting. From prosthetics that produce movement by reading signals from the remaining part of the limb to devices that enhance vision in cases of complete, partial and colour blindness, AI has taken a massive step towards easing the burden on people with impairments. With Brain-Machine Interface (BMI) prosthetics being coupled with advances in AI, the world of prosthetics as we know it has been transformed for the better.

AI-powered smart prosthetics represent a paradigm shift in the field of rehabilitation. While challenges remain, the rapid pace of innovation is paving the way for a future where amputees can experience seamless and intuitive control of their prosthetic limbs. The convergence of neuroscience, AI, and robotics is creating a world where the boundaries between human and machine are becoming increasingly blurred, offering hope and empowerment to millions.

Citations and References

- Johns Hopkins University. (Research on advanced finger movement decoding): https://hub.jhu.edu/2021/10/06/prosthetic-hand-research-ai/

- Brown University. (Research on flexible electrodes): https://www.brown.edu/news/2023-01-26/neuroelectronics

- University of Pittsburgh. (Research on sensory cortex stimulation): https://www.upmc.com/media/news/2021/prosthetic-arm-sensory-feedback

- Synchron: https://synchroninc.com/

- https://www.researchgate.net/publication/378907593_Application_of_Artificial_Intelligence_in_Prosthetics_A_Review

- https://www.globenewswire.com/news-release/2024/10/30/2971484/0/en/Ray-Zhao-s-Research-NeuroLimbAI-AI-Driven-EEG-Controlled-Haptic-Feedback-Prosthetic-Arm-Featured-in-XYZ-Media-s-Next-Generation-of-Innovators.html

- https://www.intechopen.com/chapters/73486